Cost. I have been fortunate to work for, and help migrate, one of Australia’s leading websites (seek.com.au) into the cloud, and I have worked for both large public cloud vendors. I have seen the really good and the not-so-good when it comes to architecture.

Cloud and cost. It can be quite a polarising topic. Do it right, and you can run super lean, drive down the cost to serve and ride the cloud innovation train. But do it wrong, treating public cloud like a datacentre, and your costs could be significantly higher than on-premises.

If you have found this post, I am going to assume you are a builder and resonate with the developer or architect persona.

It is you I want to talk to: those of you constructing the “Lego” blocks of architecture. You have a great deal of influence over the efficiency of that architecture.

Just like a car has a fuel economy rating, there are often trade-offs: low fuel consumption (low L/100 km, or high MPG for my non-Australian friends) often goes hand in hand with low performance.

A better analogy may be the energy efficiency rating stickers on an appliance.

How can we increase the efficiency of our architecture without compromising other facets such as reliability, performance and operational overhead?

That is the aim of the game.

There is a lot to cover, so in this multi-part blog series I am going to move quickly across many different domains. The objective is to give you things to take away: if you read this series and come away with one or two meaningful cost-saving ideas that you can actually execute, I’ll personally be exceptionally happy that you have driven cost out of your environment.

Yes, you should be spending less.

In this multi-part series, we are going to cover three main domains:

- Operational optimisation (Part 1)

- Infrastructure optimisation (Part 2)

- Architectural optimisation (Part 2 – with a demo)

With such a broad range of optimisations, hopefully something you read here will resonate with you. The idea is to show you the money: I want to show you where the opportunities for savings exist.

Public cloud is full of new toys for us builders, which is amazing (but it can be daunting). There are new levers for all of us, and with the hyperscale providers releasing north of 2,000 updates per year (5.x per day), we all need to pay attention and climb this cloud maturity curve.

Find those cost savings and invest in them; my aim here is to show you where to find the money.

I want to make this post easy to consume, because maths and public cloud can be complex at times: services may have multiple pricing dimensions.

My Disclaimer

In this post I will be using Microsoft Azure as my cloud platform of choice, but the principles apply to the other major hyperscalers (Amazon Web Services and Google Cloud Platform).

I will be using the Microsoft Azure region “Australia-East (Sydney)” because this is the region I most often use, and pricing will be in USD. Apply your own region’s pricing; the concepts will be the same and the percentage savings will likely be very similar. Do remember, though, that public cloud prices are often based on the cost of doing business in a specific geography.

Lastly, prices are a moving target; this post is accurate as of April 2022.

Cloud – A new dimension

When we look through the lens of public cloud, it has brought us all a new dimension of flexibility and so many more building blocks. How are you constructing them?

When we as builders talk about architecture, we will often architect around a few dimensions, some more important than others, depending on your requirements.

Commonly we architect for availability, performance, security and function, but I would like to propose a new domain for architecture: economy.

When you’re building your systems, you need to look at the economy of your architecture, because today, in 2022, you have a great deal of control over it. New frameworks, new tools, new technologies, new hosting platforms: new, new, new.

What I mean by economy of architecture is as simple as this:

The same or better outcome for a lower cost

I will show you how we can actually do this. I’m talking about the ability to trial and change the way a system is built during its own lifetime.

As architects, developers and builders, we need to move away from the model of heavy upfront design and finger-in-the-air predictions of the capacity a solution needs.

Instead, we should embrace the idea of radical change during an application’s lifecycle, funded by cost savings.

Yes, there are degrees to which you can do this, depending on whether you built the system yourself or you’re using COTS (Commercial Off-The-Shelf) software, but I will walk through options you can apply to your existing stacks.

How Are You Keeping Score?

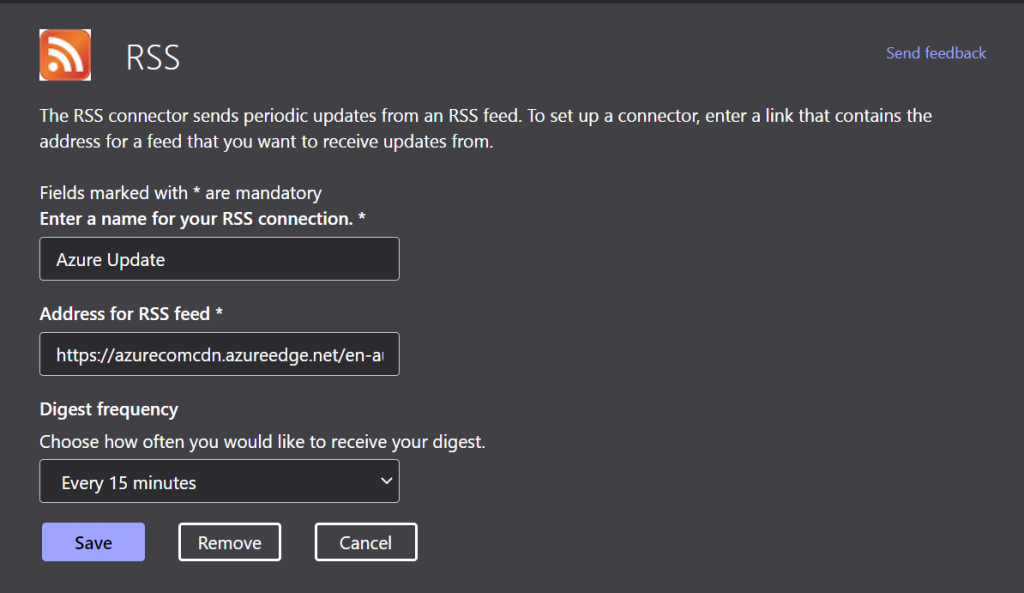

Even with COTS, there are options. Have you noticed new Lego blocks appearing in the form of updates? Do you have a mechanism in place to keep you aware of them? If you do, that’s great; if you don’t, let me share how I do this and have done it in the past.

I have used two mechanisms: one for Azure (into Microsoft Teams) and one for Amazon Web Services (into Amazon Chime via a Python Lambda function), but you could easily integrate with Slack, email and beyond. The AWS version below polls a “What’s New” RSS feed, uses a DynamoDB table (WhatsNewFeed, keyed on entryID) to remember which entries it has already posted, and posts new entries to a webhook.

```python
import decimal
import json
import os
import time

import boto3
import feedparser  # not in the Lambda runtime: bundle it with the deployment package
import requests    # not in the Lambda runtime: bundle it with the deployment package
from botocore.exceptions import ClientError


# Helper class to convert a DynamoDB item to JSON.
class DecimalEncoder(json.JSONEncoder):
    def default(self, o):
        if isinstance(o, decimal.Decimal):
            if o % 1 > 0:
                return float(o)
            else:
                return int(o)
        return super(DecimalEncoder, self).default(o)


def process_entry(rss_entry, table, webhook_url):
    """Post an RSS entry to the webhook only if its ID has not been seen before."""
    try:
        print('processing entry: ' + rss_entry['id'])
        get_response = table.get_item(Key={'entryID': rss_entry['id']})
    except ClientError as e:
        print(e.response['Error']['Message'])
    else:
        if 'Item' in get_response:
            print('key found ' + rss_entry['id'] + ' No further action')
        else:
            print('no key found, creating entry')
            write_entry_to_hook(rss_entry, webhook_url)
            # Record the entry ID so later runs skip it
            table.put_item(Item={'entryID': rss_entry['id']})
            print('writing entry to dynamo: ' + rss_entry['id'] + ' ' + rss_entry['title'])


def write_entry_to_hook(rss_entry, webhook_url):
    print('writing to webhook: ' + rss_entry['id'])
    # Amazon Chime incoming webhooks take a JSON body with a "Content" key
    hook_data = {'Content': rss_entry['title'] + ' ' + rss_entry['link']}
    print(hook_data)
    url_response = requests.post(
        webhook_url,
        data=json.dumps(hook_data),
        headers={'Content-Type': 'application/json'},
        timeout=10,
    )
    print(url_response)
    # Pause between posts so the chat room is not flooded
    time.sleep(2)


def lambda_handler(event, context):
    webhook_url = os.environ['webhook_url']
    rss_url = os.environ['rss_url']
    dynamodb = boto3.resource('dynamodb', region_name='ap-southeast-2')
    table = dynamodb.Table('WhatsNewFeed')
    feed = feedparser.parse(rss_url)
    for entry in feed['entries']:
        process_entry(entry, table, webhook_url)


# Local test run only; in Lambda the runtime calls lambda_handler itself
if __name__ == '__main__':
    lambda_handler('test', 'test')
```

The Basics

Show me the money, I say. I will show you the money, and as a bit of a teaser, here are some of the percentage savings you will be able to make. I am not talking about 1 or 2 percenters; these are sizeable chunks you can remove from your bill.

I don’t want to frame this as a basic post; again, I am here for the builders. But then again, there is the obvious stuff: the ‘Do Not Pass Go, Do Not Collect 200 Dollars’ advice. I am talking about fundamentals in cloud, and more widely in IT and software development.

You can’t improve what you can’t measure. So, I ask you all: do you know what your per-transaction cost is?

We are talking cloud fundamentals here.

Do you know what your cost to serve is?

If you do, well done, but if you don’t then how can you improve?
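As a minimal sketch of the arithmetic, cost to serve is the infrastructure spend for a period divided by the transactions served in that same period. The function name and the figures in the usage line are hypothetical placeholders, not real billing data.

```python
def cost_per_transaction(infra_cost_usd: float, transactions: int) -> float:
    """Cost to serve: infrastructure spend for a period divided by the
    number of transactions served in that same period (hypothetical helper)."""
    if transactions <= 0:
        raise ValueError("transactions must be positive")
    return infra_cost_usd / transactions


# Hypothetical month: $12,000 of infrastructure serving 40 million requests
monthly = cost_per_transaction(12_000, 40_000_000)
print(f"${monthly:.6f} per transaction")
print(f"${monthly * 1000:.4f} per 1,000 transactions")
```

Quoting the figure per 1,000 (or per million) transactions tends to make it easier to track over time and to explain to business stakeholders.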

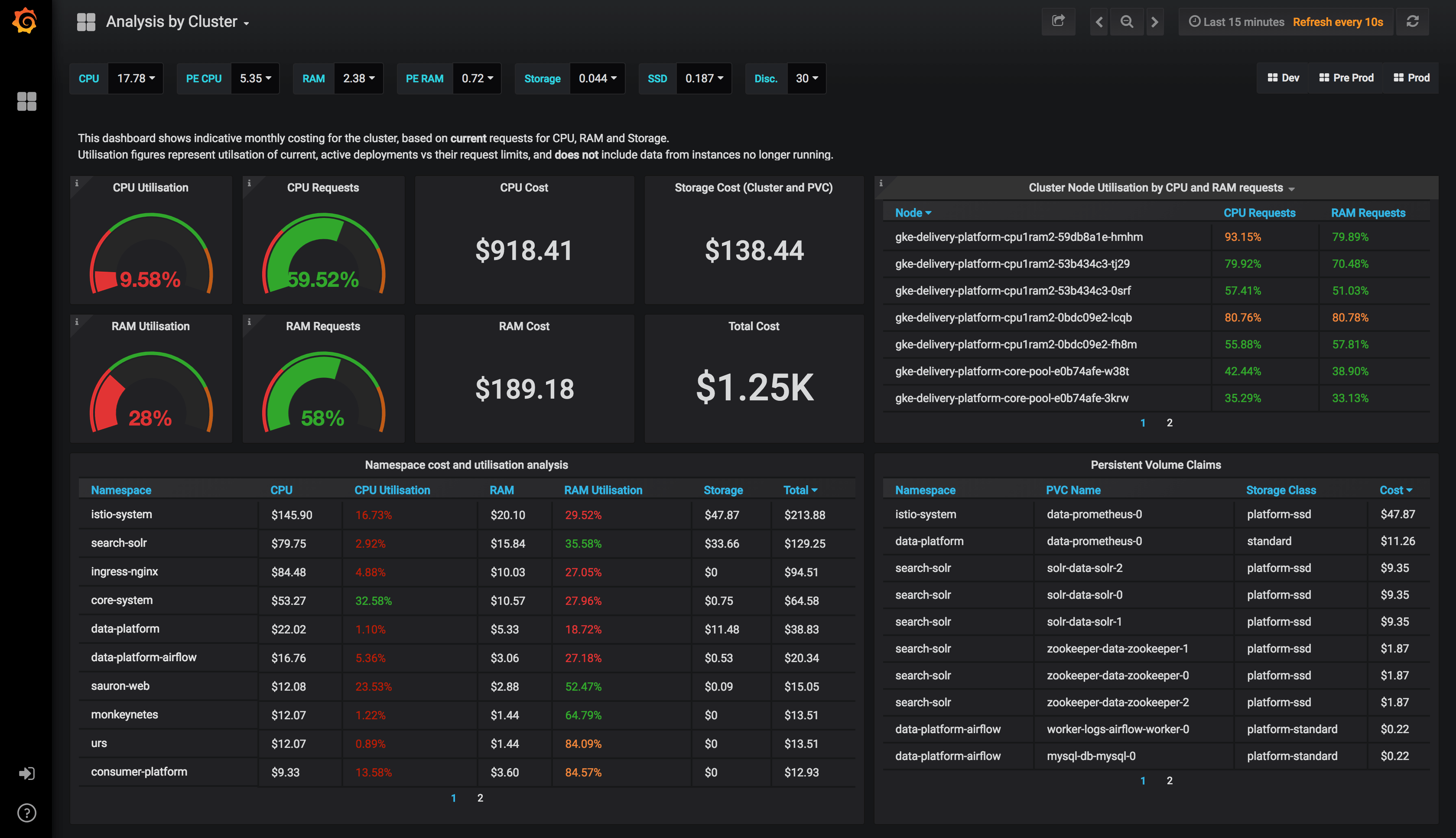

Measuring The Cost To Serve

Here is the first piece of wisdom: we need to figure this out. I will give you three different approaches:

- Beginner

  - Simply do it by hand: sit down with Azure Cost Analysis / AWS Cost Explorer, figure out your transaction rates, do some rough calculations, and be either pleasantly surprised or really shocked by what comes back.

- Intermediate

  - You gather transaction volumes in real time from your systems. You may have instrumented them with an APM (Application Performance Management) tool such as Azure Application Insights, New Relic, AWS X-Ray or Elastic APM, but you still do the calculation by hand.

- Advanced

  - You monitor in real time, and your calculations are also in real time. Leverage a platform such as Azure Event Hubs, Amazon Kinesis or Apache Kafka and derive the figure as the data arrives.
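For the advanced approach, the core calculation is a sliding window over recent metrics. The sketch below is a hypothetical, self-contained stand-in: in a real pipeline the `record` calls would be driven by a consumer reading from Event Hubs, Kinesis or Kafka, and each sample is assumed to carry one interval’s transaction count and cost, arriving in time order.

```python
from collections import deque
from typing import Deque, Optional, Tuple


class RollingCostToServe:
    """Hypothetical sliding-window cost-to-serve calculator."""

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        # Each sample: (timestamp, transactions in interval, cost in interval USD)
        self._samples: Deque[Tuple[float, int, float]] = deque()

    def record(self, ts: float, transactions: int, cost_usd: float) -> None:
        """Add one interval's metrics; assumes samples arrive in time order."""
        self._samples.append((ts, transactions, cost_usd))

    def cost_per_transaction(self, now: float) -> Optional[float]:
        """Cost per transaction over the window ending at `now`, or None if idle."""
        cutoff = now - self.window_seconds
        # Evict samples that have fallen out of the window
        while self._samples and self._samples[0][0] < cutoff:
            self._samples.popleft()
        total_tx = sum(s[1] for s in self._samples)
        total_cost = sum(s[2] for s in self._samples)
        return total_cost / total_tx if total_tx else None


tracker = RollingCostToServe(window_seconds=300)  # 5-minute window
tracker.record(0, 1_000, 0.50)
tracker.record(60, 3_000, 0.50)
print(tracker.cost_per_transaction(now=60))   # $1.00 / 4,000 transactions
print(tracker.cost_per_transaction(now=330))  # first sample evicted: $0.50 / 3,000
```

A deque keeps eviction cheap because old samples always leave from the front; a production system would more likely use the windowing features of its stream processor.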

When you have this information, you can ask the question.

What’s my average transaction flow versus my average infrastructure cost? Then you can put it up in the corner and say, “Hey developers, we need to optimise.”

This becomes your measure, and you need to make it relevant to your business stakeholders.

Operational Optimisation

How are you paying for public cloud? Using a credit card in a PAYG (Pay As You Go) model might be great to get you started but for both Microsoft Azure and Amazon Web Services it can be an expensive way to pay.

I am going to list a few bullet points for you to investigate

- Enterprise Agreement (Azure)

- Reserved Instances (Azure / AWS)

- Savings Plan (AWS)

- Spot Instances (Azure / AWS)

You need to move away from paying on demand, because it is the most expensive way to use public cloud. Savings range from 15% through to 90% compared with on-demand. Typically, discounts are given either for commitment, which gives cloud providers certainty, or, in the case of Spot, for your ability to use idle capacity that the provider can reclaim.

‘Reserved Instances’ and ‘Savings Plans’, whilst not groundbreaking, allow you to minimise the cost of traditional architectures. My next piece of wisdom is to set a percentage coverage target for ‘Reserved Instances / Savings Plans’.

Some of the best organisations I have seen in the past have had up to 80% of their IaaS resources covered by ‘Reserved Instances / Savings Plans’. If you don’t have a target, I suggest you look into this.
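To see why a coverage target matters, here is a minimal sketch of blended spend when part of your usage is committed and the rest pays on-demand. The 40% discount and $10,000 spend in the usage line are hypothetical (the discount sits inside the 15% to 90% range above), and the function assumes the committed capacity is fully used.

```python
def blended_cost(on_demand_cost: float, coverage: float, discount: float) -> float:
    """Blended spend when `coverage` (0-1) of usage is under a commitment
    priced at `discount` (0-1) off on-demand and the rest pays on-demand.

    Assumes the committed capacity is fully used; unused reservations are
    still billed, so over-committing erodes these savings.
    """
    if not (0 <= coverage <= 1 and 0 <= discount <= 1):
        raise ValueError("coverage and discount must be between 0 and 1")
    committed = on_demand_cost * coverage * (1 - discount)
    uncommitted = on_demand_cost * (1 - coverage)
    return committed + uncommitted


# Hypothetical: $10,000/month at on-demand rates, 80% coverage, 40% discount
monthly = blended_cost(10_000, coverage=0.80, discount=0.40)
print(f"${monthly:,.2f} per month, saving {1 - monthly / 10_000:.0%}")
```

The overall saving works out to coverage × discount, so 80% coverage at a 40% discount removes 32% of the bill.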

But before you make a purchase, understand your workload: its ebbs and flows, and what its baseline load is.

The rule of thumb is to assess a workload for three months and right-size it accordingly during that time.

Use Azure Monitor / Amazon CloudWatch in combination with Azure Advisor / AWS Trusted Advisor, and tune your application.
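One way to turn three months of monitoring data into a commitment figure is to take a low percentile of hourly usage as the baseline to reserve, leaving peaks to on-demand or Spot. This is a heuristic I am using for illustration, not a provider-recommended formula; the function name, the 10th-percentile default and the synthetic usage data are all assumptions.

```python
import math


def baseline_capacity(hourly_instance_counts, percentile=10):
    """A capacity level that usage met or exceeded in at least
    (100 - percentile)% of the observed hours (nearest-rank percentile).

    Hypothetical heuristic: reserve at this level so commitments sit below
    the troughs, and cover anything above it with on-demand or Spot.
    """
    if not hourly_instance_counts:
        raise ValueError("need at least one sample")
    if not 0 < percentile <= 100:
        raise ValueError("percentile must be in (0, 100]")
    ordered = sorted(hourly_instance_counts)
    rank = math.ceil(percentile * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


# Synthetic example: ~3 months of hourly samples (90 days x 24 hours),
# 4 instances overnight (00:00-07:59) and 10 during the day
samples = [4 if hour % 24 < 8 else 10 for hour in range(90 * 24)]
print(baseline_capacity(samples))  # 4
```

In this synthetic case you would commit to roughly 4 instances and let the daytime peak run on demand or on Spot; real data will be noisier, which is why the three-month observation window matters.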

Optimise The Humans – High Value vs. Low Value

Operational optimisation is an interesting one, because how much time do we really spend thinking about labour cost? You hire people, they come in, they do ‘stuff’ for you, and you pay them. The thing is, cloud practitioners cost a lot of money.

To prove my point, I did a bit of an international investigation. What does a DBA (Database Administrator) cost per hour around the world, converted to US dollars?

| Country | Median DBA Cost Per Hour (USD) |
|---|---|
| USA | $65 |
| Australia | $55 |
| United Kingdom | $49 |
| Japan | $79 |
| Germany | $61 |
| Brazil | $75 |

This is just the median DBA, and none of us would ever settle for working with just a median DBA. So we have established that people have a cost. But let’s think about what this cost actually means.

Let’s look through the lens of something DBAs do often: a minor database engine upgrade. This is important, as we should be upgrading our databases on a regular basis (security, features, performance).

Let’s compare Azure Database for MySQL / Amazon RDS, both managed services for running relational databases in the cloud, with running a database engine on IaaS.

| Self-Managed (IaaS) | Azure Database for MySQL / Amazon RDS |
|---|---|
| Backup primary | Verify update window |
| Backup secondary | Create a change record |
| Backup server OS | Verify success in staging |
| Assemble upgrade binaries | Verify success in production |
| Create a change record | |
| Create rollback plan | |
| Rehearse in development | |
| Run against staging | |
| Run against production standby | |
| Verify | |
| Failover | |
| Run in production | |
| Verify | |
| Total: 8 hours minimum | Total: 1 hour |

Managed services may be more expensive on paper, but the administrative cost of performing the undifferentiated heavy lifting yourself is far greater. I save time, and I receive logs and an audit trail that I can attach to my change record for auditability.

You may say to me, “Well, Shane, we’re going to spend that money anyway. I’ve hired these people; they are not going away.”

I would say that’s great, but you could invest that chunk of time into something of greater business value, such as tuning your database (query plans, index optimisation). That is a better use of a DBA’s time.

And with that, stay tuned for Part 2, where we get real.
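To put a number on that trade-off, here is a minimal sketch that prices the hours saved per minor upgrade using the median rates and the 8-hour versus 1-hour estimates from the tables above. The estate size and upgrade cadence in the usage line are hypothetical, and the result covers labour only, not the price difference between the managed service and IaaS VMs.

```python
# Median DBA hourly rates (USD) from the table above
DBA_RATE_USD = {
    "USA": 65, "Australia": 55, "United Kingdom": 49,
    "Japan": 79, "Germany": 61, "Brazil": 75,
}
SELF_MANAGED_HOURS = 8  # "8 hours minimum" per minor upgrade on IaaS
MANAGED_HOURS = 1       # per minor upgrade on a managed service


def upgrade_labour_saving(country: str, databases: int, upgrades_per_year: int) -> int:
    """Yearly DBA labour cost (USD) avoided on minor upgrades by using a
    managed database service. A lower bound, since 8 hours is a minimum."""
    hours_saved = (SELF_MANAGED_HOURS - MANAGED_HOURS) * databases * upgrades_per_year
    return hours_saved * DBA_RATE_USD[country]


# Hypothetical estate: 20 databases, 4 minor upgrades each per year
print(upgrade_labour_saving("Australia", databases=20, upgrades_per_year=4))  # 30800
```

That is 560 DBA hours a year freed up for higher-value work; weigh it against the managed service’s price premium to see the full picture.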

Summary

Public cloud brings a multitude of opportunities to builders and architects. Are you looking for the pots of gold? They are there. Public cloud provides a raft of new levers that you can pull and twist to architect for the new world.

Climb the cloud maturity curve and achieve the same or better outcome at a lower cost.

Architectures can evolve, but change needs to make sense. What is the cost to change?

Join me in part 2 as we get deeper into Infrastructure and Architectural optimisations you can make.

Shane Baldacchino
