Are you like me? When something breaks, do you pull it apart to see how it ticks or do you place it in the bin?

Are there any obvious signs of magic smoke? I am sure you can guess the kind of person I am and as a result have a decently organised shed of lots of different weird and wonderful spare parts. From microswitches to relays, looms and beyond I Bower Bird a lot of things, as you never know when they will come in handy.

Recently I upgraded our WiFi, you can read about it here by using secondhand Cisco Aironet 3702i’s. One arrived DOA (Dead On Arrival) with no response via the console, the seller sent me another one. Then 2 weeks later, another access point failed. I could not believe it as I have never had a Cisco device fail, but it was stuck in a boot loop.

After jumping through my own loop to prove to the seller it was a hardware problem, I received another unit (make that 6 in total, 4 working, 2 dead). But with these 2 dead units, can I resurrect these from the dead and make a good unit?

In this post, come on a learning journey with me as I pull apart two dead Cisco 3702i’s and make one good unit from two dead ones.

The Problems

Unit 1: No console, nothing and a solid red status indicator. This was the first unit of the four units I tried and after pulling my hair out, I plugged my console cable into another Cisco 3702i and I received a prompt. I knew something had to be wrong, above and beyond the fact that I didn’t have a WLC (Wireless Lan Controller) as I have gone through the process of re-flashing an image many times before.

According to Cisco I was in more than a bit of trouble. DRAM failure, a clear-cut failure and not much I can do with my limited skills (DRAM is soldered to the main PCB).

| Ethernet LED | Radio LED | Status LED | Meaning |

| Red | Red | Red | DRAM Memory test failure |

| Off | Off | Blinking red and blue | Flash file system failure |

| Off | Amber | Alternating red and green | Environment variable (ENVAR) failure |

| Amber | Off | Rapid blinking red | Bad MAC address |

| Red | Off | Blinking red on and off | Ethernet failure during image recovery |

| Amber | Amber | Blinking red on and off | Boot environment error |

| Red | Amber | Blinking red on and off | No Cisco IOS image file |

| Amber | Amber | Blinking red on and off | Boot failure |

Unit 2: This was a bit of a strange one, and I am sure the seller either was wondering what I was doing or perhaps I was the cause of this failure. This exhibited as a boot loop.

I ended up having to create the seller a video to prove this was a hardware failure. A lot of hoops to jump through with the seller thinking a reflash would fix the problem.

And as you can see in the video, we are stuck in a boot loop, every 45 seconds it would crash due to a self-test failure.

Mar 1 00:00:40.511: %DOT11-6-GEN_ERROR: Error on Dot11Radio1 -

Radio interface bus down --

-Traceback= 11B5350z 182F794z 21B3DF8z 21B4720z 21C532Cz 181DB68z 190869Cz 190A21Cz 190969Cz 1827264z 183E428z 12CD044z 12CD224z 12CD390z 10C2644z 10C28BCz

*Mar 1 00:00:40.511: %DOT11-2-RADIO_FAILED: Interface Dot11Radio1, failed due to the reson code 44

-Traceback= 11B5350z 182ED90z 182F644z 182F7ACz 21B3DF8z 21B4720z 21C532Cz 181DB68z 190869Cz 190A21Cz 190969Cz 1827264z 183E428z 12CD044z 12CD224z 12CD390z

*Mar 1 00:00:40.511: %SOAP_FIPS-2-SELF_TEST_RAD_FAILURE: RADIO crypto FIPS self test failed at AES 128-bit CCM encrypt on interface Dot11Radio 1 -Process= "Init", ipl= 0, pid= 3

*Mar 1 00:00:40.511: %SOAP_FIPS-2-SELF_TEST_RAD_FAILURE: RADIO crypto FIPS self test failed at AES 128-bit CCM decrypt on interface Dot11Radio 1 -Process= "Init", ipl= 0, pid= 3

00:00:42 UTC Mon Mar 1 1993: Unexpected exception to CPU: vector 700, PC = 0x1345E64 , LR = 0x1345E64

-Traceback= 0x1345E64z 0x1345E64z 0x183E428z 0x12CD044z 0x12CD224z 0x12CD390z 0x10C2644z 0x10C28BCz 0x13472D4z 0x132E05Cz

CPU Register Context:

MSR = 0x00029200 CR = 0x24244022 CTR = 0x011B48E0 XER = 0x00000000

R0 = 0x01345E64 R1 = 0x048C9500 R2 = 0x00010001 R3 = 0x048CB2EC

R4 = 0x00000000 R5 = 0x00000000 R6 = 0x011B534C R7 = 0x00000000

R8 = 0x00000002 R9 = 0x00000000 R10 = 0x039A0000 R11 = 0x039A0000

R12 = 0x24244024 R13 = 0x26D50848 R14 = 0x00000001 R15 = 0x03A49E24

R16 = 0x03A49E7E R17 = 0x00000000 R18 = 0x0000004C R19 = 0x00000000

R20 = 0x000000FF R21 = 0x00000004 R22 = 0x093A1798 R23 = 0x09361798

R24 = 0x021B0C68 R25 = 0x092B0418 R26 = 0x00000001 R27 = 0x093904F4

R28 = 0x092B2184 R29 = 0x037362B8 R30 = 0x0372F9E4 R31 = 0x00000000

TEXT_START : 0x01003000

DATA_START : 0x03000000

MCSRR0: 0x01498850, MCSRR1: 0x00000000 MCSR: 0x00000000 MCAR: 0x800010FC

Total PCI 0 MC Error = 0; PCI LD count=0

Total PCI 1 MC Error = 1024; PCI LD count=0

Total PCI 2 MC Error = 0; PCI LD count=0

Writing crashinfo to flash:crashinfo_19930301-000042-UTC

Nested exception_rom_monitor call (2 times)

Unexpected exception to CPUvector 700, PC = 1345E64

-Traceback= 0x1345E64z 0x1345E64z 0x183E428z 0x12CD044z 0x12CD224z 0x12CD390z 0x10C2644z 0x10C28BCz 0x13472D4z 0x132E05Cz

Exception (700)! Program

SRR0 = 0x01499238 SRR1 = 0x00029200 DEAR = 0x00000000

CPU Register Context:

Vector = 0x00000700 PC = 0x01345E64 MSR = 0x00029200 CR = 0x24244022

LR = 0x01345E64 CTR = 0x011B48E0 XER = 0x00000000

R0 = 0x01345E64 R1 = 0x048C9500 R2 = 0x00010001 R3 = 0x048CB2EC

R4 = 0x00000000 R5 = 0x00000000 R6 = 0x011B534C R7 = 0x00000000

R8 = 0x00000002 R9 = 0x00000000 R10 = 0x039A0000 R11 = 0x039A0000

R12 = 0x24244024 R13 = 0x26D50848 R14 = 0x00000001 R15 = 0x03A49E24

R16 = 0x03A49E7E R17 = 0x00000000 R18 = 0x0000004C R19 = 0x00000000

R20 = 0x000000FF R21 = 0x00000004 R22 = 0x093A1798 R23 = 0x09361798

R24 = 0x021B0C68 R25 = 0x092B0418 R26 = 0x00000001 R27 = 0x093904F4

R28 = 0x092B2184 R29 = 0x037362B8 R30 = 0x0372F9E4 R31 = 0x00000000

Stack trace:

PC = 0x01345E64, SP = 0x048C9500

Frame 00: SP = 0x048C9518 PC = 0x01345E64

Frame 01: SP = 0x048C9530 PC = 0x01827280

Frame 02: SP = 0x048C95F8 PC = 0x0183E428

Frame 03: SP = 0x048C9610 PC = 0x012CD044

Frame 04: SP = 0x048C9630 PC = 0x012CD224

Frame 05: SP = 0x048C9650 PC = 0x012CD390

Frame 06: SP = 0x048C9690 PC = 0x010C2644

Frame 07: SP = 0x048C97A0 PC = 0x010C28BC

Frame 08: SP = 0x048C97A8 PC = 0x013472D4

Frame 09: SP = 0x00000000 PC = 0x0132E05C

When 2 Become 1

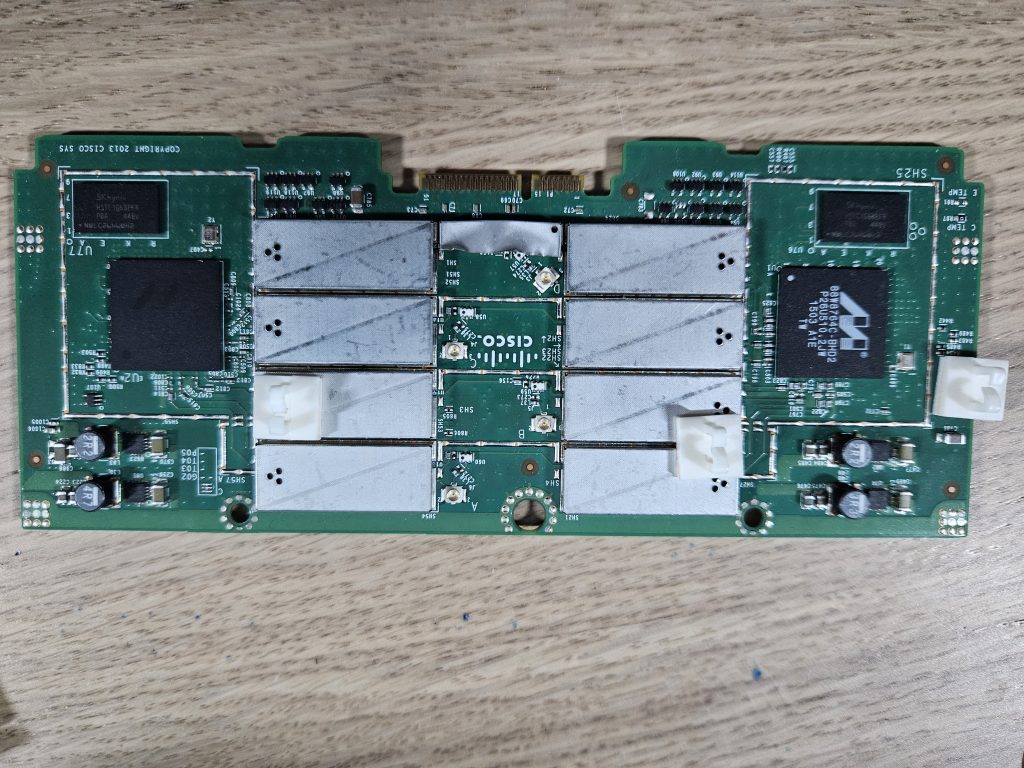

This got me thinking. After the first unit failure (DRAM failure) I used the relevant Torx driver (T15) and pulled the unit apart. Why? I am always intrigued in the build quality of high-end pieces of equipment.

From RF(Radio Frequency) isolation in the form of shields through to heavy copper traces to isolate noise, they are almost a thing of beauty when cost is not the primary driver influencing design decisions.

But upon opening, i noticed two PCBs sandwiched with a connector. One board had the PowerPC CPU, RAM and physical interfaces and the other connected all the radio antennas.

Cast aluminum, gasket seals, no plastic tabs to be seen, a really well built unit.

One device failed with bad DRAM and zero console output and the other failed with a Radio FIPS error. Two unique failures on two different PCBs. I am sure you know where I am going.

Is Cisco like Apple tying hardware modules to each other, making repair almost impossible without re-flashing the firmware of specific packages? The answer is no. Kudos Cisco.

By combining the radio module from the board with failed DRAM into the board with a FIPS Radio error I assembled one good unit, from two non-working units and prevented some e-waste at the same time.

There were no errors in the boot-up process, no messages about mismatched boards and no firmware to flash and whilst I have a picture of my oscilloscope on my workbench, it was only for show 😎

Summary

This is just one example of when saving parts from failed units has gotten me out of trouble. Whilst I am not encouraging hording, keeping common parts for even a few weeks can often get you out of a bind. In this example, it doesn’t get any easier and by using a bit of common sense you too can prevent items ending up as e-waste.

It’s broken anyway, so be curious, pull it apart and see what makes it tick and before too long you will pick up a new skill or two.

Shane Baldacchino

Hello. I bought 2 Cisco 3702, but one arrived broken, with the same error of your second unit: one of two radios broken and bootloop.

I’m sure it’s the WiFi module broken, because I swapped with the other unit and the problem swapped also.

The seller sent me another unit, working, but I’m trying to do something with the broken unit to avoid to trash it.

I found that is almost usable as 2.4Ghz only AP: with old version of standalone firmware, or connected to a WLC it’s working without bootloop, but obviously without 5Ghz radio…

Not so good, but…

Yeah interesting Piero, I was thinking I was the only person with that issue. I had two broken units and was lucky enough to combine in to one.

Good luck and thanks for the comment.